Hmm, sorry @Redsky, it looks like I misclicked and clicked the “edit” button instead of the “quote” one.  I restored your post based on my quotes.

So here was my first post

You described here less distinct highlights and less harsh shadows.

As a preamble, I want to remind everyone that we are delivering a game to users, not screenshots.

Screenshots seen out of the gaming context and displayed at reduced size on a forum may give a different impression than actually roaming the rendered world while playing.

I didn't describe less distinct highlights and less harsh shadows, I described the result of a computation.

To make a comparison, I'm not discussing the fact that people may perceive the dress as white and gold, but the fact that it is blue and black, so we should sample it as blue and black before applying the light over it. Even if the result of the computation could produce the same pixel color we would get by sampling gold, we should implement the computation that produces that pixel color by sampling black and not gold.
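To illustrate what "sampling the true color before applying the light" means, here is a minimal sketch and not the engine's actual code. It assumes a single-channel texel stored with the standard sRGB transfer function and a light factor expressed as a linear intensity; the function names and the sample values are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode one linear-light channel for display, clamped to 0..1."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def shade_naive(texel: float, light: float) -> float:
    """Naive pipeline: multiply the still-encoded texel by the light."""
    return min(texel * light, 1.0)


def shade_linear(texel: float, light: float) -> float:
    """Linear pipeline: decode, apply the light, then encode for display."""
    return linear_to_srgb(srgb_to_linear(texel) * light)


if __name__ == "__main__":
    texel = 0.5  # hypothetical mid-gray sRGB texel
    for light in (0.25, 0.5, 1.0):  # hypothetical linear light intensities
        naive = shade_naive(texel, light)
        linear = shade_linear(texel, light)
        print(f"light={light}: naive={naive:.3f} linear={linear:.3f}")
```

Under these assumptions, both paths agree at full light, but the naive path gives a darker pixel than the linear path at partial light. The softer shadows are then a consequence of the computation, not a stylistic choice.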

Someone wanting more distinct highlights or harsher shadows should set up the lighting accordingly in the source materials, not rely on broken computations that may happen to look pleasing when framed in a selective screenshot while the render breaks as soon as we look elsewhere.

Both are effects of more diffuse light. It can be the expected effect and wanted effect, based on how light/textures are treated, but here's the question: are we able to recreate harsh dynamic lighting in sRGB branch?

We never had harsh dynamic lighting. We had broken short light falloff, broken light bouncing, and broken light attenuation (and we still have broken light backsplash as a point light, but this is more minor).

We sometimes had the lucky perception of harsh dynamic lighting when we aligned the stars with the sacrifice of a goat, and it only worked if we didn't look around or behind.

Q3map2 provides multiple lighting options when making maps: some for hard direct sunlight (like on a sunny day), some for more diffuse lighting (like on a cloudy day), and the ability to mix them. We also have a color grader in the engine that allows some artistic creativity, like modifying the saturation, the contrast or the tone of a whole scene. Those are the mechanisms a creator should leverage to get what they have in mind.

I don't want to throw water onto the fire, but this post undercuts goal of showing off importance of accurate non-naive accounting for sRGB assets. That's because with any major change to renderer assets become de-synced from engine.

It is true that some of our assets were tested with a broken engine; it is obvious for the transparent textures.

I also suspect that some of the specular maps from our oldest texture packs may have been. Or maybe I'm still too accustomed to brokenness, like most of us, to fully accept the truth yet.

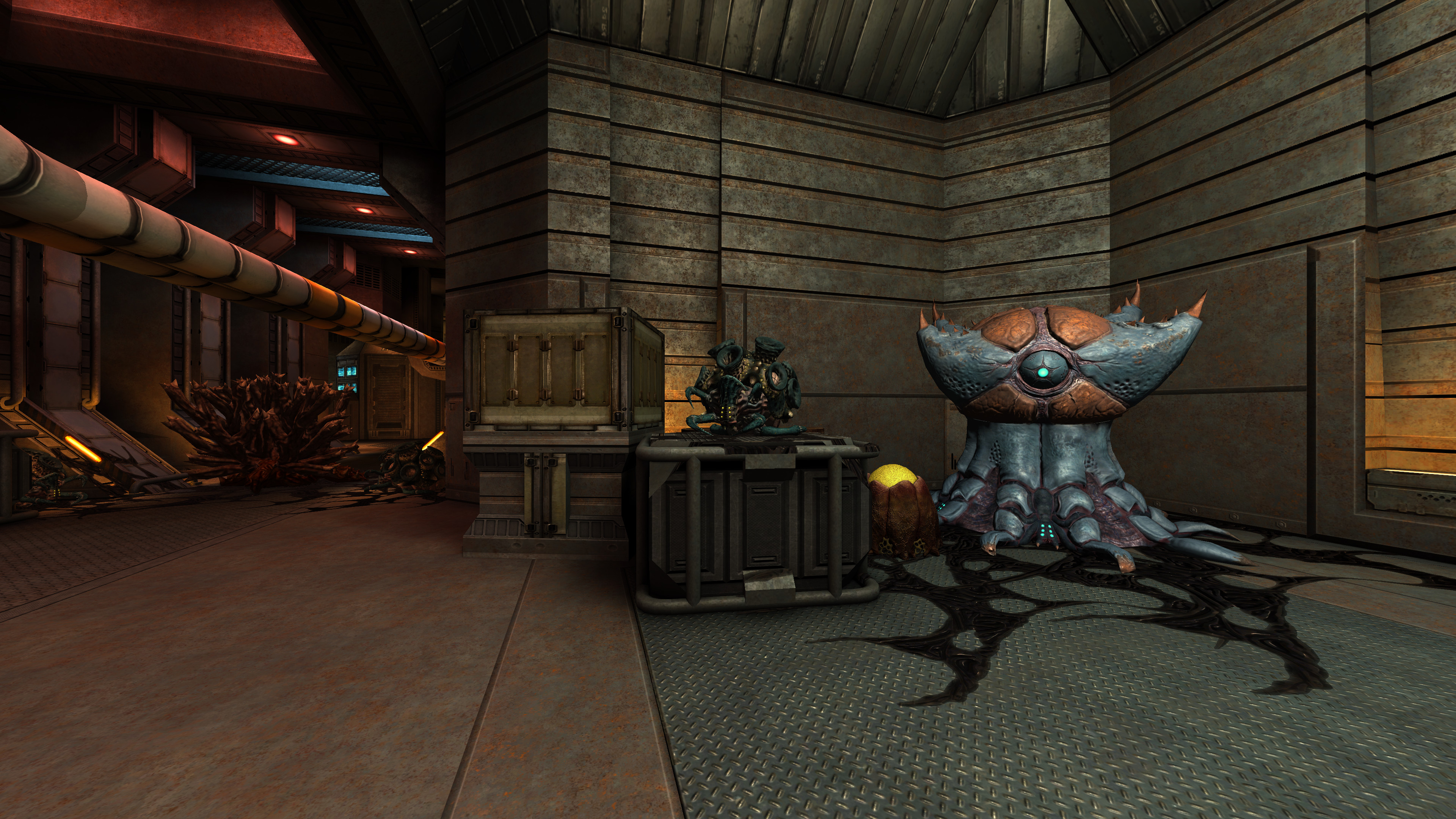

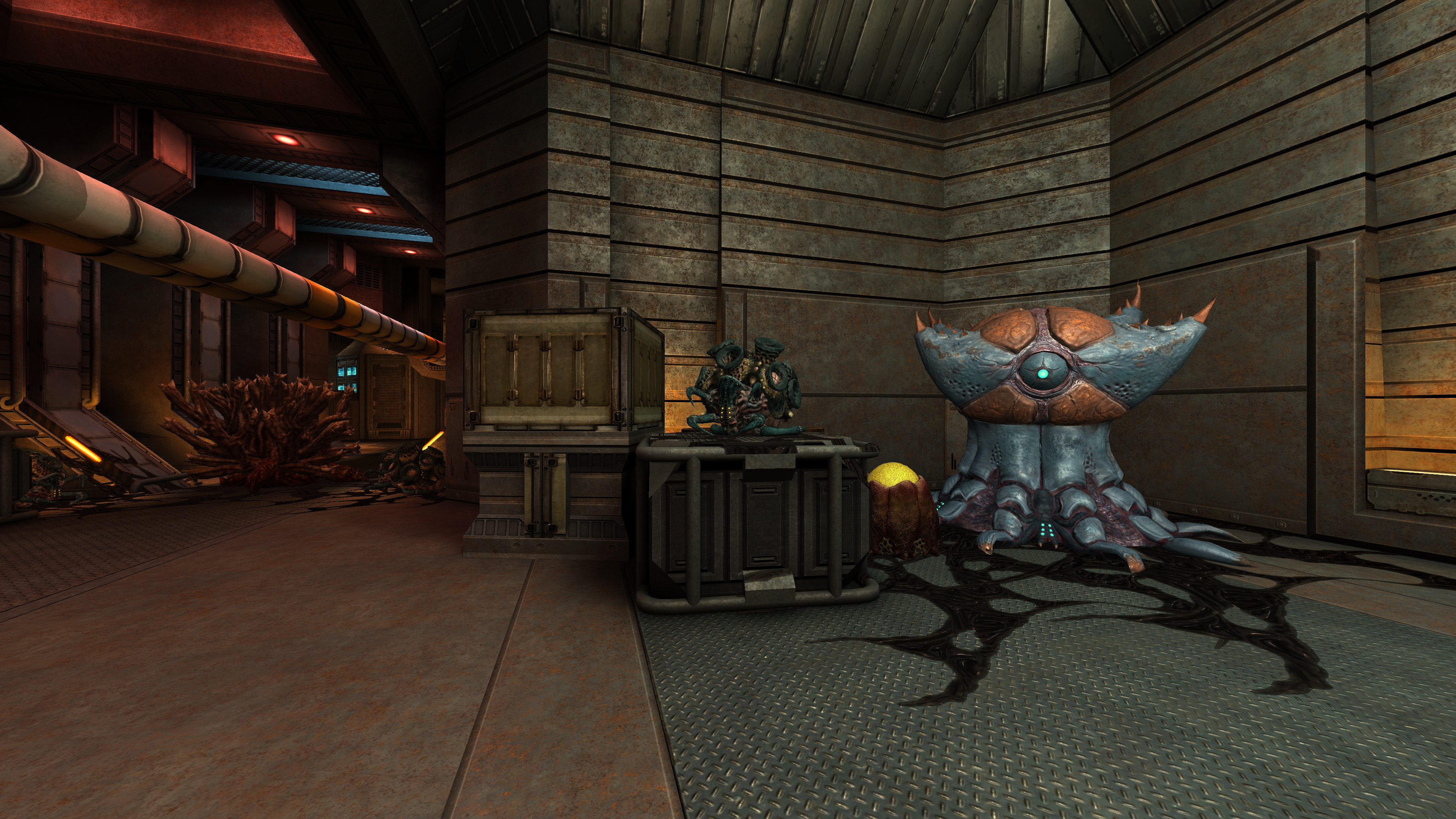

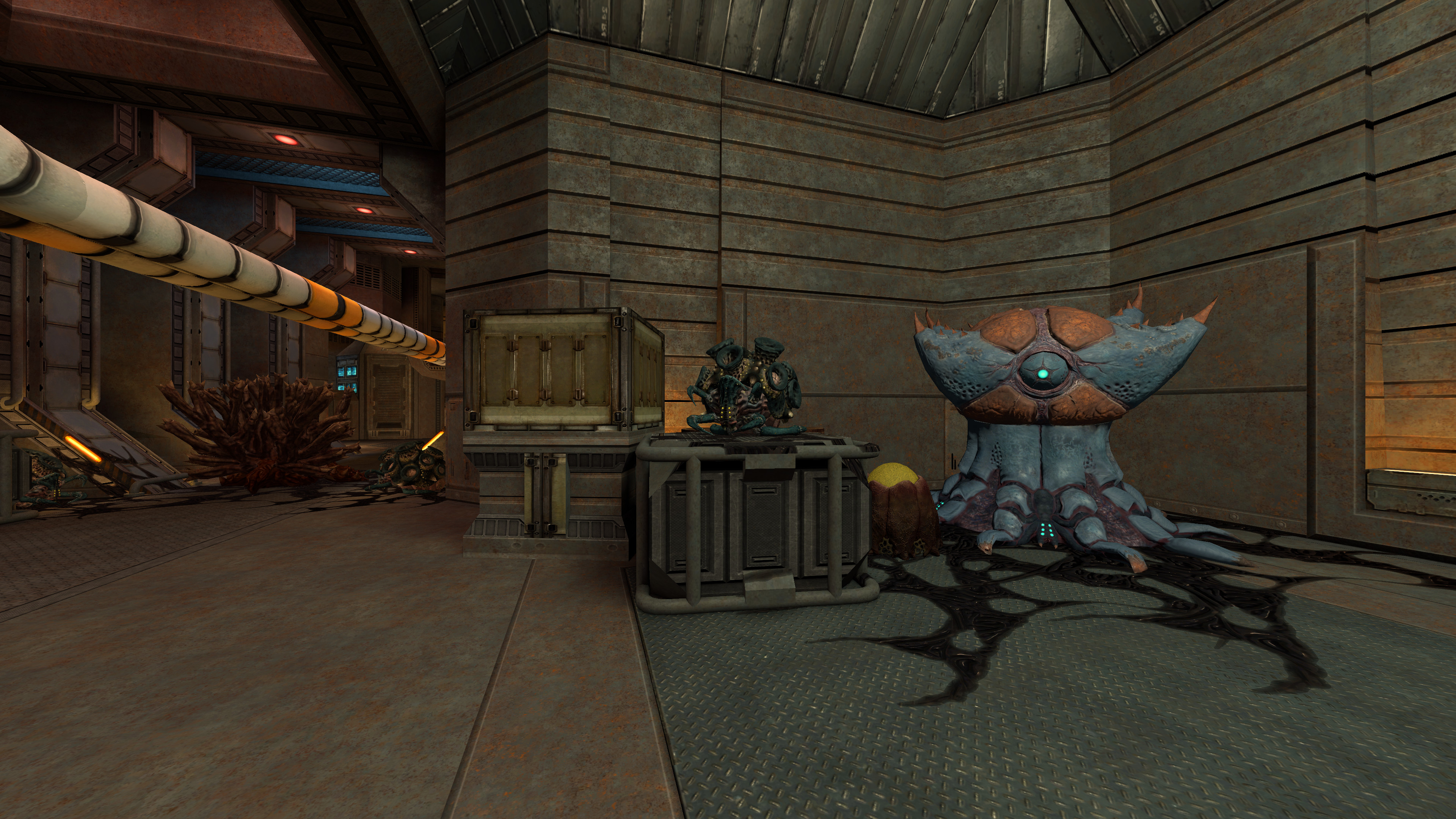

For example, I may still believe something is wrong because it's not like it was before, while before was wrong but I'm accustomed to it. A model like the overmind falls into that range where I still have doubts. The overmind doesn't look broken in the new linear pipeline if I unlearn the way I look at it, but I have been accustomed to seeing it overly shiny for years, so my first thought was that the linear pipeline was breaking it. Then I spent more time experimenting with the branch and comparing with more models, and now I'm starting to see the old overmind's excessive shininess as buggy.

Also, I know we modified some textures that did not look in game like they looked in the modeler's software, so we may have some select assets that are in some in-between state.

After tonemapping got introduced by Reaper geometry of maps is less obscured by the shadows and lights become much more saturated. The feature is a big win in my book, but I don't know if I could say that this is how maps were "supposed to look". The way I see the update providing a way for improvement upon the assets, that old rendering system didn't. To be more in line with original spirit of those maps one might start with desaturating lights and perhaps tweaking their strength and see what can be done from there, but the matter of fact is assets (maps) need to be updated.

I share the feeling of “win in the book, mixed opinion on the result”. I like the idea of tonemapping on paper, but my experience of our current implementation is that it seems to break both maps made for the non-linear pipeline and maps made for the linear one. I suspect it is not calibrated properly yet. I also believe tonemapping is a good thing to have because, if I'm right, it is a required foundation for the upcoming adaptive lighting. But right now something really feels unnatural when looking at maps with the tonemapper enabled. I'll probably start a dedicated thread on the tonemapper.

You assert sRGB branch fixes lighting errors of assets. I'm not convinced these 'lighting errors' aren't quirks of naive implementation and matter of taste. Even if textures were created 100% correctly by trustworthy process they still may have been intentionally tweaked(eyeballed) to match visual representations in the old engine 10+ years ago. I can say for sure that some of the textures were definitely put together haphazardly and deemed 'good enough' while others were never finished.

Of course many of our assets were just “put together haphazardly and deemed 'good enough' while others were never finished”, but something like the human model is obviously wrong with the old pipeline. It always has been, and my brain was never fooled into believing that the human model was meant to look like this.

Also, we have to keep in mind that even when a texture is not very good, it's already a win if the lighting itself is good, whatever the texture.

Some of our textures may look worse with the linear pipeline, but that is not an argument in favor of breaking the lighting. Once we get proper lighting, we have a first win; when we fix such a texture, we have a second win.

To conclude presenting full potential of changes you introduced requires assets tailor-made for the task.

A proposed solution:

produce or procure asset with trusted specular map

find light/exposure conditions in which naive implementation would obviously fail

optionally recreate renders in analogous conditions in a software that is capable of rendering without mangling sRGB textures/light

So,

produce or procure asset with trusted specular map

Yes, we should favor trusted specular maps or, better, PBR maps (we definitely need to fix our PBR pipeline too).

find light/exposure conditions in which naive implementation would obviously fail

The Vega map is a good example where the naive implementation is obviously failing.

optionally recreate renders in analogous conditions in a software that is capable of rendering without mangling sRGB textures/light

Do you mean something like rendering our map in Blender to check whether the newly implemented pipeline in our engine does it right?

I'm concerned about being able to cross-check what I implemented (I may have introduced bugs and taken for granted something that is unknowingly broken). That's why I used Xonotic maps in my testing process: I can compare the same Xonotic map build in both DarkPlaces and the Dæmon engine. This only allows me to compare the pipeline in the renderer, though, not in the lightmapper.

And here was my second post

I want to remind everyone that the purpose of the engine is to bring newer pipelines to the game for newly produced content.

It doesn't conflict with our other goal to support legacy assets done the old way.

What is neither a purpose of the engine nor part of our goals is to provide a way to produce newer assets the old way, rendered by a newer pipeline, mixing fixes from today with brokenness from before. That goal would conflict both with the goal of bringing newer pipelines for newly produced content the way it is made today, and with the goal of supporting old assets the way they were made before.

So to rephrase it, we have a goal to bring a modern and accurate linear pipeline supporting PBR workflows etc., and we have a goal to load the existing maps made with techniques from 1999, but we don't have as a goal to mix a PBR workflow with broken lighting from 1999.

Regarding old assets, we maintain the legacy behavior, and I'm known to be strongly advocating for that, but when producing new content, we should not handicap ourselves with legacy brokenness, and we should not handicap contributors and newcomers with such legacy brokenness.

Someone coming tomorrow and starting to create a map, a mod, or a whole game on the Unvanquished game or Dæmon engine should never have to care about the legacies. Such a person should be able to start with a fully linear pipeline and PBR workflow without having to know about anything legacy.

So yes, once we merge the linear pipeline branch and start using it with newly created or rebuilt maps, we will have to make some iterative minor changes to improve the look of our assets. But we have to keep in mind that those changes may not even be fixes, just us leveraging the engine to finally do things we could not do before because of brokenness.

The matter is not new. The same concern arose when we switched to non-fast broken light falloff, and the concern will arise again when we switch backsplash lights to the area light way instead of the broken point light way that Q3 used in 1999. Of course, every time we fix something in the lighting, we may have to reconsider what we have. But we will end up there anyway, today or tomorrow, so we had better do it as soon as we can. We can't avoid that fate, so we had better not delay it while we are alive. The only way to avoid it is for the project to die before the engine becomes correct, which is not what we want.